Deep Dive into HashTable, HashMap & ConcurrentHashMap Performance

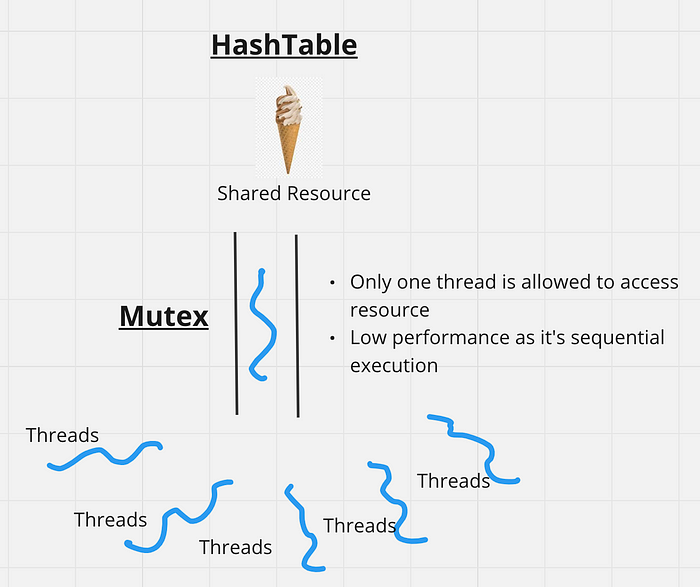

HashTable

HashTable uses mutex for all operation which makes them completely thread safe.

🔸 Concurrent reads/writes are synchronised leading to data consistency

🔸 Low performance due to only one thread is allow to access map leading to sequential execution of tasks

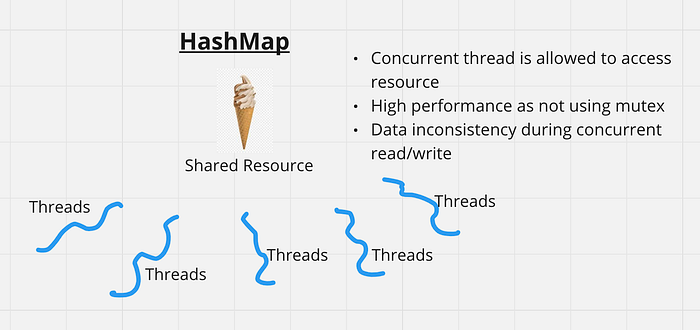

HashMap

HashMap is not thread safe, concurrent access of resource is allowed.

🔸 Concurrent reads/writes are allowed which can lead to data inconsistency

🔸 High performance as not using mutex, parallel execution of all thread.

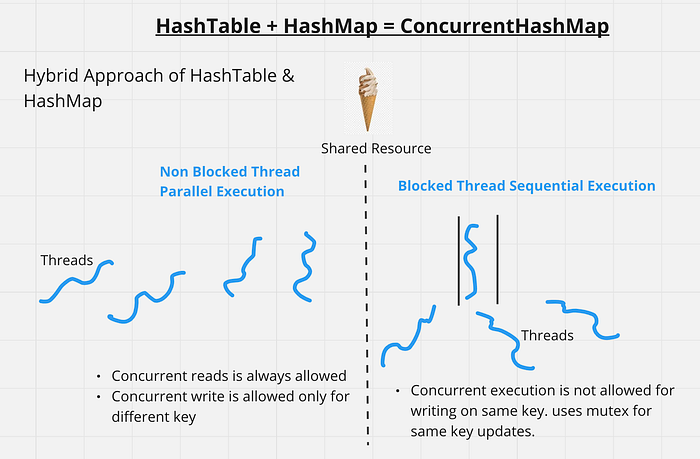

ConcurrentHashMap

ConcurrentHashMap is a thread safe due to hybrid approach of hashtable & hashmap as only uses mutex when required.

🔸 Concurrent read operation is always allowed

🔸 Concurrent different key write is allowed

🔸 Concurrent same key write uses mutex for data consistency

🔸 Performance is high for concurrent reads/write. Low performance for same key write.

We can conclude that hashtable is low performance but maintain high consistency whereas hashmap is high performance but comes at a cost of data inconsistency. ConcurrentHashMap can provide high performance & uses mutex to maintain data consistency for same key updates.

Performance Test

Let start coding to do performance test of all the map. feel free to try the code in language of your choice as it’s doesn’t matter.

Steps:

🔸 Define three map hashtable, hashMap & concurrentHashMap. All the map having two keys “key1” & “key2”.

🔸 Concurrent write operation on each map. we are updating key1 & key2 value at same time by different thread.

🔸 One write operation takes 2 sec to complete. check “writeFor2Sec” method as we are sleeping thread for 2 sec while updating map.

🔸 Note down time to complete write operation on each map. check “checkTimeOfExecution” method invoking all the parallel thread execution on map & calculating time taken to complete task.

public static void main(String[] args) throws Exception {

// creating thread pool of size 2

ExecutorService service = Executors.newFixedThreadPool(2);

// defining hashtable, hashmap & concurrentHashMap

Hashtable<String, String> hashtable = new Hashtable<>();

HashMap<String, String> hashMap = new HashMap<>();

ConcurrentHashMap<String, String> concurrentHashMap = new ConcurrentHashMap<>();

// Putting key value pair in all maps

hashtable.put("key-1", "value-1");

hashtable.put("key-2", "value-2");

hashMap.put("key-1", "value-1");

hashMap.put("key-2", "value-2");

concurrentHashMap.put("key-1", "value-1");

concurrentHashMap.put("key-2", "value-2");

Collection<Callable<String>> allOperations = new ArrayList<>();

/**

* Creating two thread in each map to update separate key. each write operation will take 2 sec to complete.

*/

// Update keys & check time of execution for hashtable if write operation take 2 sec

allOperations.add(writeFor2Sec(hashtable, "key-1", "value-3"));

allOperations.add(writeFor2Sec(hashtable, "key-2", "value-4"));

checkTimeOfExecution(service, allOperations, "HASH_TABLE");

// Update keys & check time of execution for hashmap if write operation take 2 sec

allOperations = new ArrayList<>();

allOperations.add(writeFor2Sec(hashMap, "key-1", "value-3"));

allOperations.add(writeFor2Sec(hashMap, "key-2", "value-4"));

checkTimeOfExecution(service, allOperations, "HASH_MAP");

// Update keys & check time of execution for concurrenthashmap if write operation take 2 sec

allOperations = new ArrayList<>();

allOperations.add(writeFor2Sec(concurrentHashMap, "key-1", "value-3"));

allOperations.add(writeFor2Sec(concurrentHashMap, "key-2", "value-4"));

checkTimeOfExecution(service, allOperations, "CONCURRENT_HASHMAP");

}

private static void checkTimeOfExecution(ExecutorService service, Collection<Callable<String>> allOperations,

String MapName)

throws InterruptedException {

long startTime = System.currentTimeMillis();

// invoking all thread operations

service.invokeAll(allOperations);

long endTime = System.currentTimeMillis();

int timeTaken = (int) ((endTime - startTime)/1000);

System.out.println(String.format("%s took %s seconds to complete write operations", MapName, timeTaken));

}

/**

* Executing map put operation after 2 sec delay

*/

private static Callable<String> writeFor2Sec(Map<String, String> map, String key, String value) {

Callable<String> writeFor2Sec = () -> map.computeIfPresent(key, (k, v) -> {

try {

sleep(2000);

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

return value;

});

return writeFor2Sec;

}Assumption

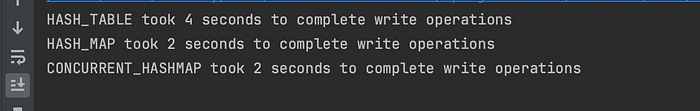

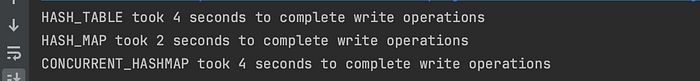

First we should do result assumption before running code.

One write operation takes 2 sec & we are doing two parallel write operation on different key for each map.

HashTable:

Completely synchronised in nature so only one thread is allowed to access map at a time irrespective of key. since we have two parallel execution it will get executed as sequential.

Total Time = Time taken by first thread + time taken by second thread.

Total Time = 2 + 2 = 4 sec

HashMap:

Concurrent execution allowed without any synchronisation both thread should execute at same time.

Total Time = Max(Time taken by first thread, time taken by second thread)

Total Time = Max(2,2) = 2 sec

ConcurrentHashMap:

For different key concurrentHashMap allow concurrent updates so it should take same time as hashmap.

Total Time = Max(Time taken by first thread, time taken by second thread)

Total Time = Max(2,2) = 2 sec

Output:

Case: Concurrent Write Operation on same key

🔸 Code is same as above now we are just writing concurrently on same key. every map we are updating “key-1” by two threads concurrently.

public static void main(String[] args) throws Exception {

// creating thread pool of size 2

ExecutorService service = Executors.newFixedThreadPool(2);

// defining hashtable, hashmap & concurrentHashMap

Hashtable<String, String> hashtable = new Hashtable<>();

HashMap<String, String> hashMap = new HashMap<>();

ConcurrentHashMap<String, String> concurrentHashMap = new ConcurrentHashMap<>();

// Putting key value pair in all maps

hashtable.put("key-1", "value-1");

hashMap.put("key-1", "value-1");

concurrentHashMap.put("key-1", "value-1");

Collection<Callable<String>> allOperations = new ArrayList<>();

/**

* Creating two thread in each map to update same key. each write operation will take 2 sec to complete.

*/

// Update keys & check time of execution for hashtable if write operation take 2 sec

allOperations.add(writeFor2Sec(hashtable, "key-1", "value-3"));

allOperations.add(writeFor2Sec(hashtable, "key-1", "value-4"));

checkTimeOfExecution(service, allOperations, "HASH_TABLE");

// Update keys & check time of execution for hashmap if write operation take 2 sec

allOperations = new ArrayList<>();

allOperations.add(writeFor2Sec(hashMap, "key-1", "value-3"));

allOperations.add(writeFor2Sec(hashMap, "key-1", "value-4"));

checkTimeOfExecution(service, allOperations, "HASH_MAP");

// Update keys & check time of execution for concurrenthashmap if write operation take 2 sec

allOperations = new ArrayList<>();

allOperations.add(writeFor2Sec(concurrentHashMap, "key-1", "value-3"));

allOperations.add(writeFor2Sec(concurrentHashMap, "key-1", "value-4"));

checkTimeOfExecution(service, allOperations, "CONCURRENT_HASHMAP");

}Assumption

HashTable:

Completely synchronised in nature same result as before.

Total Time = Time taken by first thread + time taken by second thread.

Total Time = 2 + 2 = 4 sec

HashMap:

Concurrent execution allowed without any synchronisation same result as before.

Total Time = Max(Time taken by first thread, time taken by second thread)

Total Time = Max(2,2) = 2 sec

ConcurrentHashMap:

ConcurrentHashMap do synchronisation for concurrent same key write.

so time taken will be same as hashtable.

Total Time = Time taken by first thread + time taken by second thread.

Total Time = 2 + 2 = 4 sec

Output:

It’s interesting to see concurrent hash map is supporting both synchronisation & concurrent access.

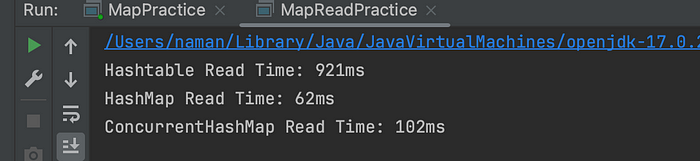

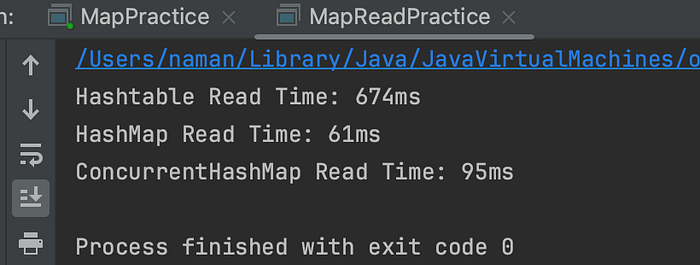

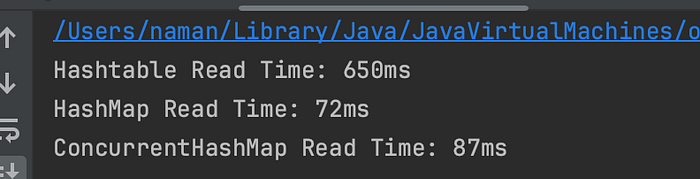

Case: Concurrent Read Operation

🔸 Populate 1 million record in map

🔸 Concurrent run 10 thread each doing 1 million read operation

🔸 Checking execution time to complete read operation

private static final int NUM_THREADS = 10;

private static final int NUM_OPERATIONS = 1000000;

public static void main(String[] args) throws InterruptedException {

Hashtable<Integer, String> hashtable = new Hashtable<>();

HashMap<Integer, String> hashMap = new HashMap<>();

ConcurrentHashMap<Integer, String> concurrentHashMap = new ConcurrentHashMap<>();

// Populate map with 1 million value

for (int i = 0; i < NUM_OPERATIONS; i++) {

hashtable.put(i, "Value " + i);

hashMap.put(i, "Value " + i);

concurrentHashMap.put(i, "Value " + i);

}

long startTimeHashtable = System.currentTimeMillis();

runReadOperations(hashtable);

long endTimeHashtable = System.currentTimeMillis();

System.out.println("Hashtable Read Time: " + (endTimeHashtable - startTimeHashtable) + "ms");

long startTimeHashMap = System.currentTimeMillis();

runReadOperations(hashMap);

long endTimeHashMap = System.currentTimeMillis();

System.out.println("HashMap Read Time: " + (endTimeHashMap - startTimeHashMap) + "ms");

long startTimeConcurrentHashMap = System.currentTimeMillis();

runReadOperations(concurrentHashMap);

long endTimeConcurrentHashMap = System.currentTimeMillis();

System.out.println("ConcurrentHashMap Read Time: " + (endTimeConcurrentHashMap - startTimeConcurrentHashMap) + "ms");

}

/**

* Running 10 threads in parallel each reading 1 million record

*/

private static void runReadOperations(java.util.Map<Integer, String> map) throws InterruptedException {

Thread[] threads = new Thread[NUM_THREADS];

for (int i = 0; i < NUM_THREADS; i++) {

threads[i] = new Thread(() -> {

for (int j = 0; j < NUM_OPERATIONS; j++) {

map.get(j);

}

});

}

for (Thread thread : threads) {

thread.start();

}

for (Thread thread : threads) {

thread.join();

}

}Assumption

Hashtable is synchronised resulting each thread blocking the whole map while executing. time of execution for hashtable will be high.

Hashmap allow all concurrent read so execution time will be low.

ConcurrentHashMap also allow all concurrent read so execution time will be low relatively same as hashmap.

Output(Running code multiple times):

we can see hashtable is performing poorly but hashmap & concurrent hash map have high performance.